Continuous-wave Time-of-Flight (ToF) sensors can robustly measure scene depth in real time. These systems owe their success to several benefits: simple processing for depth map estimation, no moving components, dense depth maps, and no artifacts due to occlusions or scene texture. ToF cameras also have limitations that call for further analysis and improvement, including low spatial resolution, a maximum measurable distance, estimation artifacts on edges and corners, and wrong depth estimates caused by the Multi-Path Interference (MPI) phenomenon. We propose different approaches to correct MPI errors and improve depth estimation from ToF data.
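
The maximum measurable distance mentioned above follows from the standard continuous-wave relation between phase shift and depth. The following is a minimal sketch of that textbook relation, not code from the publications listed here; the function names and the 20 MHz modulation frequency in the usage note are illustrative assumptions.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest depth measurable before the phase wraps: c / (2 f)."""
    return C / (2.0 * mod_freq_hz)


def phase_to_depth(phase_rad: float, mod_freq_hz: float) -> float:
    """Depth from the measured phase shift: d = c * phi / (4 * pi * f).

    The phase is wrapped into [0, 2*pi), so any depth beyond the
    unambiguous range aliases back to a shorter distance.
    """
    wrapped = phase_rad % (2.0 * math.pi)
    return C * wrapped / (4.0 * math.pi * mod_freq_hz)
```

For example, `unambiguous_range(20e6)` is about 7.5 m, and a phase of `3 * math.pi` yields the same depth as a phase of `math.pi`, which is why multi-frequency acquisitions are commonly used to extend the range.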

Furthermore, we also considered heterogeneous acquisition systems made of two high-resolution standard cameras and one ToF camera. We derived probabilistic and learned fusion algorithms that produce high-quality depth information by combining the data from both devices.
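
To illustrate the general idea of probabilistic fusion, here is a minimal per-pixel sketch that assumes independent Gaussian noise on both depth sources. This is a deliberately simplified stand-in, not the measurement model or optimization used in the publications; the function name `fuse_depth` and its variance inputs are hypothetical.

```python
def fuse_depth(d_tof: float, var_tof: float,
               d_stereo: float, var_stereo: float) -> tuple[float, float]:
    """Inverse-variance weighted fusion of two depth estimates.

    Under the assumption of independent Gaussian errors, this is the
    maximum-likelihood estimate: the more reliable source (smaller
    variance) receives the larger weight.
    """
    w_tof = 1.0 / var_tof
    w_stereo = 1.0 / var_stereo
    fused = (w_tof * d_tof + w_stereo * d_stereo) / (w_tof + w_stereo)
    fused_var = 1.0 / (w_tof + w_stereo)  # never larger than either input variance
    return fused, fused_var
```

The per-source variances play the role of confidence information: in textured regions stereo would typically receive a lower variance, while on uniform surfaces the ToF measurement would dominate.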

Key research topics include:

- We first propose to use a Convolutional Neural Network (CNN), trained on synthetic data, to estimate and correct the error due to MPI corruption. The CNN takes data acquired with a multi-frequency ToF camera as input, exploiting the frequency diversity of MPI to estimate it.

- We introduced a novel unsupervised domain adaptation method to improve the ToF denoising performance on real data without using real-world depth ground truth.

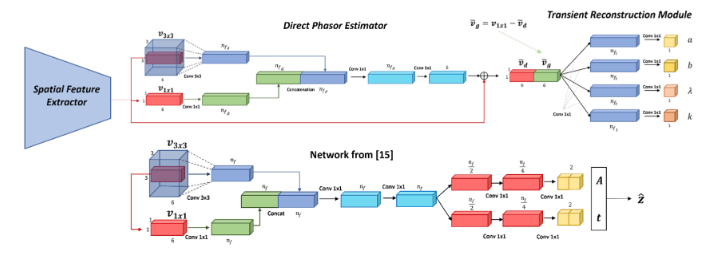

- We introduced approaches for estimating the complete back-scattering vector, which describes the light arrival time, allowing for more accurate MPI removal with smaller deep learning models.

- We also propose to use a modified ToF camera that illuminates the scene with a spatially modulated sine pattern.

- We derived a probabilistic framework for stereo-ToF fusion, using an advanced measurement error model accounting for the mixed pixels effect together with a global MAP-MRF optimization scheme using an extended version of Loopy Belief Propagation with site-dependent labels.

- We also exploited a different approach for the fusion of the two data sources that extends the locally consistent framework used in stereo vision to the case where two different depth data sources are available.

- The approach is further extended by exploiting a Convolutional Neural Network for the estimation of the confidence information. A novel synthetic dataset has also been constructed and used for the training of the deep network.
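
The frequency diversity of MPI that the first topic exploits can be illustrated with a toy model in which each pixel receives a direct return plus one weaker indirect return. This is a simplified sketch under that assumption, not the CNN-based method itself; the function name `measured_depth` and the amplitudes and depths in the usage note are hypothetical.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in m/s


def measured_depth(paths: list[tuple[float, float]], mod_freq_hz: float) -> float:
    """Depth a continuous-wave ToF pixel reports when several returns overlap.

    `paths` holds (amplitude, depth_m) pairs. Each return contributes a
    phasor with phase 4*pi*f*d/c; the sensor only observes their sum, so
    the recovered phase (and depth) is biased by the indirect returns.
    """
    phasor = sum(a * cmath.exp(1j * 4.0 * math.pi * mod_freq_hz * d / C)
                 for a, d in paths)
    phase = cmath.phase(phasor) % (2.0 * math.pi)
    return C * phase / (4.0 * math.pi * mod_freq_hz)
```

With a direct return at 2 m and an indirect one at 3 m, for example `[(1.0, 2.0), (0.3, 3.0)]`, the reported depth at 20 MHz and at 60 MHz deviates from 2 m by different amounts. That frequency-dependent bias is the cue a multi-frequency input makes available to a learned correction.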

Selected publications:

Lightweight Deep Learning Architecture for MPI Correction and Transient Reconstruction Journal Article

In: IEEE Transactions on Computational Imaging, vol. 8, pp. 721–732, 2022.

Unsupervised domain adaptation of deep networks for ToF depth refinement Journal Article

In: IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 12, pp. 9195–9208, 2021.

Stereo and ToF data fusion by learning from synthetic data Journal Article

In: Information Fusion, vol. 49, pp. 161–173, 2019.

Unsupervised domain adaptation for ToF data denoising with adversarial learning Proceedings Article

In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 5584–5593, 2019.

Confidence estimation for ToF and stereo sensors and its application to depth data fusion Journal Article

In: IEEE Sensors Journal, vol. 20, no. 3, pp. 1411–1421, 2019.

Deep learning for multi-path error removal in ToF sensors Proceedings Article

In: Proceedings of the European Conference on Computer Vision (ECCV) Workshops, 2018.

Combination of spatially-modulated ToF and structured light for MPI-free depth estimation Proceedings Article

In: Proceedings of the European Conference on Computer Vision (ECCV) Workshops, 2018.

Probabilistic ToF and stereo data fusion based on mixed pixels measurement models Journal Article

In: IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 37, no. 11, pp. 2260–2272, 2015.